As we close out a year that started with a conspiracy-fuelled assault on the US Capitol and is ending with all the buzz around the Metaverse; as we find ourselves wondering how fringe opinions became mainstream while generals warn of impending civil war, I thought it would be helpful to lay out simply how we got here, from a technical perspective.

For those aghast at the vulnerability that log4j represents, have a seat; we have a worse one in our heads.

I’ll try to keep it short, but if you’re in a rush…

TL;DR: we accidentally became cyborgs, and our brains have been hacked through old, unpatched flaws that allow others to tell us what to think. This is an outline of those human design flaws and how they are exploited.

First, the unpatched flaw in human cognition, laid out in a few steps:

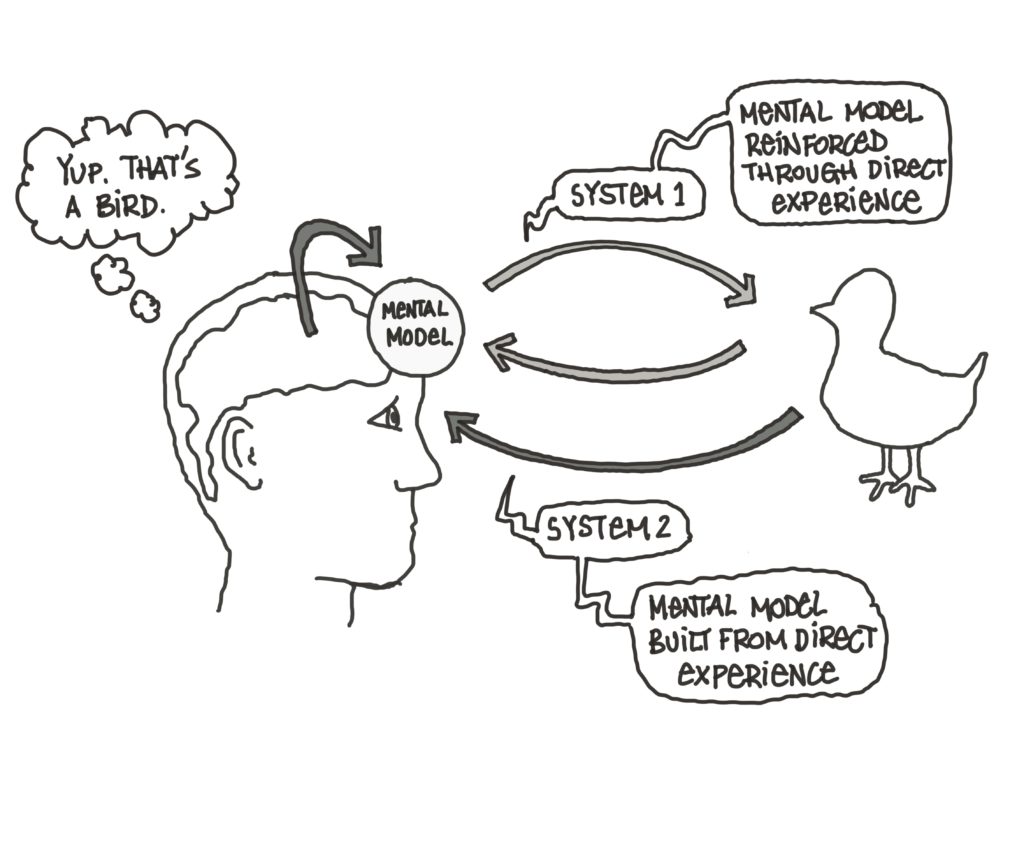

1.) Humans operate mostly on autopilot, guided by mental models generated from infancy to make navigating the world easier

2.) Those models are created through hard, direct experience (that’s sharp, that hurts, that one burns, that is tasty)

3.) Once we have a mental model, we don’t revisit it again unless we have to…we just use it for autopilot (oops, there was one more stair on this staircase than I thought…attention!)

4.) Those mental models apply not just to the physical world, but also to the social and conceptual world (hence “what is the sound of one hand clapping”)

This flawed but useful system has served our species well enough (we thought up science, cities, supply chains and music), with some notable drawbacks (racism, wars, genocide, climate change).

While in the past this flaw left us vulnerable to xenophobia and charismatic leaders, it also created a much bigger vulnerability: an unprotected opening for a human/machine interface.

Mental models and Kahneman’s whole construct of “System 1 and System 2” (reflexive use of mental models and considered thought, respectively) are based on a system of inputs and outputs which, especially in the social and conceptual world, is vulnerable to man-in-the-middle attacks (I’ve kept this expression gendered, because, well, in this case, the perpetrators generally fit the idiom).

Here’s the hack:

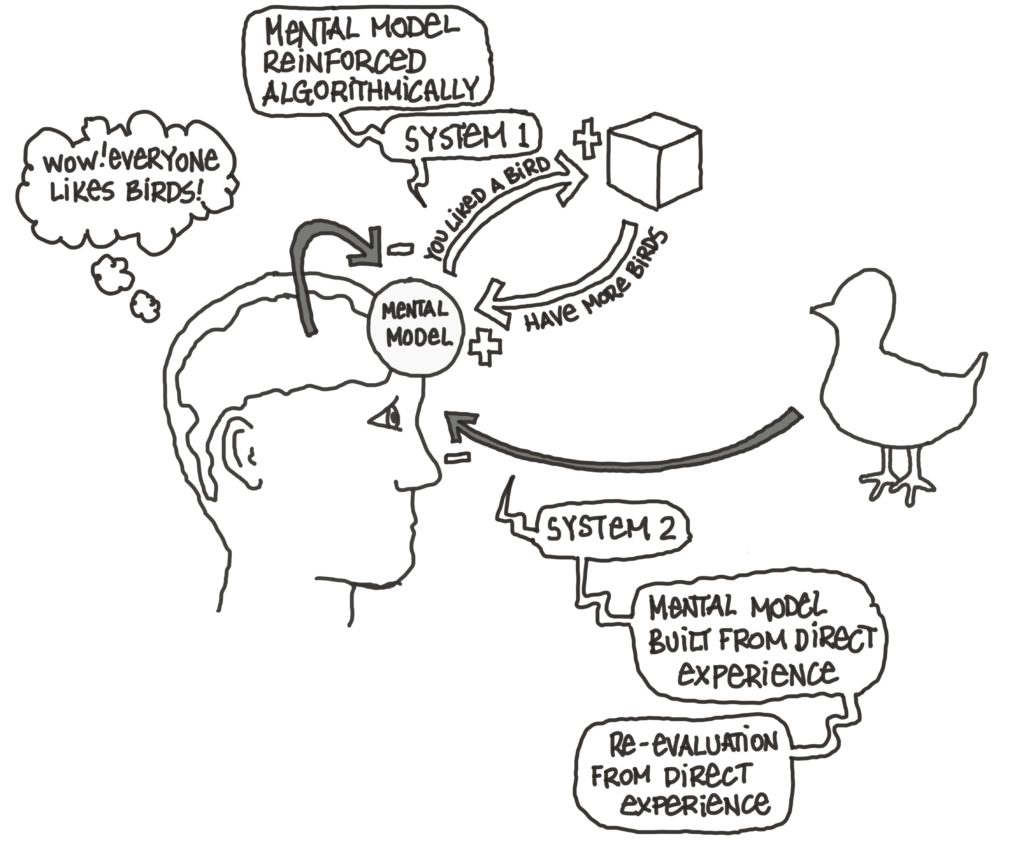

1.) In digitally mediated interactions (web searches, social sharing, reading, looking at photos, GPS navigation, shopping, adjusting your thermostat) you can watch patterns to find the edges of someone’s mental models (habits, sexual preference, gender identity, political views, cat/dog person)

2.) Staying within “n” degrees of that mental model allows injection of information that won’t trigger conscious thought

3.) Adding peripheral information allows exploitation of “availability and representativeness” heuristics (if I see it a lot, there must be a lot of it)

4.) Most people are like at least some other people; finding groups of similar people lets you test how far you can push them.

And there it is – though this is obviously the simplified version.
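For the code-minded, here is a toy sketch of steps 1 and 2. Everything in it is my own assumption for illustration, not how any real platform works: a mental model is reduced to a single number on a made-up -100 to 100 scale, the person's position is just the average of what they have engaged with, and the names `estimate_position`, `pick_item` and `simulate` are hypothetical. Steps 3 and 4 (peripheral information and testing on similar groups) are left out.

```python
from statistics import mean


def estimate_position(engaged_positions):
    """Step 1: infer where someone's model sits from what they engaged with."""
    return mean(engaged_positions)


def pick_item(catalogue, position, n, direction=1):
    """Step 2: pick the item furthest along `direction` that is still
    within `n` of the estimated position, so it should not stand out."""
    in_range = [c for c in catalogue if abs(c - position) <= n]
    if not in_range:
        return None
    return max(in_range, key=lambda c: direction * (c - position))


def simulate(history, catalogue, n, rounds):
    """Repeat estimate-then-inject and record the estimated position
    after each round. Assumes every injected item gets engaged with."""
    history = list(history)
    path = [estimate_position(history)]
    for _ in range(rounds):
        item = pick_item(catalogue, estimate_position(history), n)
        if item is None:
            break
        history.append(item)
        path.append(estimate_position(history))
    return path


if __name__ == "__main__":
    # Someone who starts out centred on 0, nudged five times with n = 10.
    for step, position in enumerate(simulate([0, 2, -2], range(-100, 101), 10, 5)):
        print(step, round(position, 2))
```

In this toy version no single item is ever more than `n` away from where the person already is, yet the estimated position only ever moves one way. That is the whole trick: each step is small enough to stay on autopilot.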

I’ve laid this out in a few simple models – unmediated, mediated and captured.

As our information ecosystem becomes increasingly mediated through digital channels, it is important to understand that our cognition is co-evolving in response to our sources of stimuli. The natural environment we evolved in was indifferent to us, but the digital environment is not. Go ahead; test the matrix. Change your behaviour on the platforms you use, and see how it shifts.

Now, how the stimuli are served to the humans depends entirely on the priorities coded into the algorithm. What is it optimizing for? Is your GPS teaching you the fastest routes, or the ones that pass by the most McDonald's? Is your news feed optimizing for balanced viewpoints, or engagement? Are search results based on rigour and authority, or sponsorship?
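A minimal sketch of that point, with invented numbers: the same three items, ranked under three different objectives. The `Item` fields, the scores and the `rank` function are all hypothetical; real ranking systems blend many signals, but the principle is that the ordering you see follows from whichever score the code sorts by.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    title: str
    rigour: float       # made-up score: how well sourced the item is
    engagement: float   # made-up score: how likely it is to be clicked
    sponsor_fee: float  # made-up score: what someone paid to place it


# Each objective is just a different answer to "what are we optimizing for?"
OBJECTIVES = {
    "rigour": lambda item: item.rigour,
    "engagement": lambda item: item.engagement,
    "sponsorship": lambda item: item.sponsor_fee,
}

ITEMS = [
    Item("Careful explainer", rigour=0.9, engagement=0.3, sponsor_fee=0.0),
    Item("Outrage thread", rigour=0.2, engagement=0.9, sponsor_fee=0.0),
    Item("Paid placement", rigour=0.4, engagement=0.5, sponsor_fee=1.0),
]


def rank(items, objective):
    """Return titles ordered by the chosen objective, highest score first."""
    score = OBJECTIVES[objective]
    return [item.title for item in sorted(items, key=score, reverse=True)]


if __name__ == "__main__":
    for name in OBJECTIVES:
        print(name, "->", rank(ITEMS, name))
```

Nothing about the items changes between runs; only the one line that chooses the score does. From the outside, you never get to see which line was chosen.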

Unfortunately, for this software update, you’re going to have to patch yourself.